Reward-Sharing Relational Networks in Multi-Agent Reinforcement Learning as a Framework for Emergent Behavior

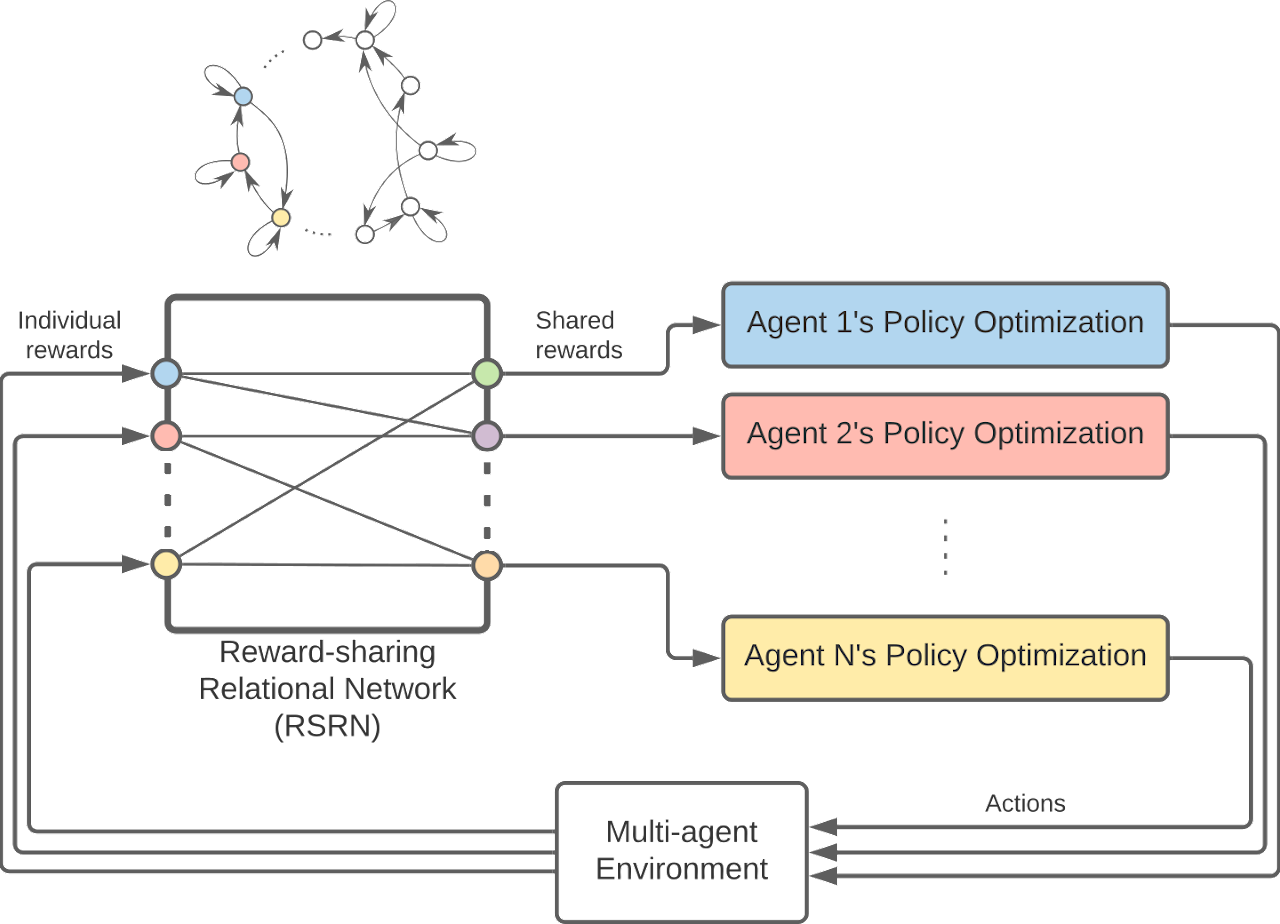

The proposed framework adds a reward-sharing block to a typical MARL flow diagram. The block takes a vector of individual rewards and a user-defined relational network as inputs and passes a vector of shared rewards to the policy optimizer.
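As a rough illustration of the block's input/output contract, the sketch below maps a vector of individual rewards and a relational network to a vector of shared rewards. The aggregation rule is an assumption: the text above does not say how rewards are combined, so a plain weighted sum is used here as a stand-in. The names `share_rewards`, `individual`, `selfish`, and `cooperative` are hypothetical and not taken from the original work.

```python
from typing import List, Sequence


def share_rewards(
    individual_rewards: Sequence[float],
    relational_network: Sequence[Sequence[float]],
) -> List[float]:
    """Return one shared reward per agent.

    relational_network[i][j] is the weight agent i places on agent j's reward.
    ASSUMPTION: rewards are combined with a weighted sum; the source text
    does not specify the aggregation function.
    """
    n = len(individual_rewards)
    if len(relational_network) != n or any(len(row) != n for row in relational_network):
        raise ValueError("relational_network must be an n x n matrix")
    return [
        sum(weight * reward for weight, reward in zip(row, individual_rewards))
        for row in relational_network
    ]


# Hypothetical individual rewards for a 3-agent system.
individual = [1.0, 0.5, -2.0]

# Only self-loops: every agent is driven by its own reward alone.
selfish = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# Every agent weights every agent's reward (including its own) equally.
cooperative = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]

shared_selfish = share_rewards(individual, selfish)
shared_cooperative = share_rewards(individual, cooperative)
```

With only self-loops the block passes the individual rewards through unchanged, so the setup reduces to ordinary independent learners. In a training loop, the shared vector would be handed to the policy optimizer in place of the individual one.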

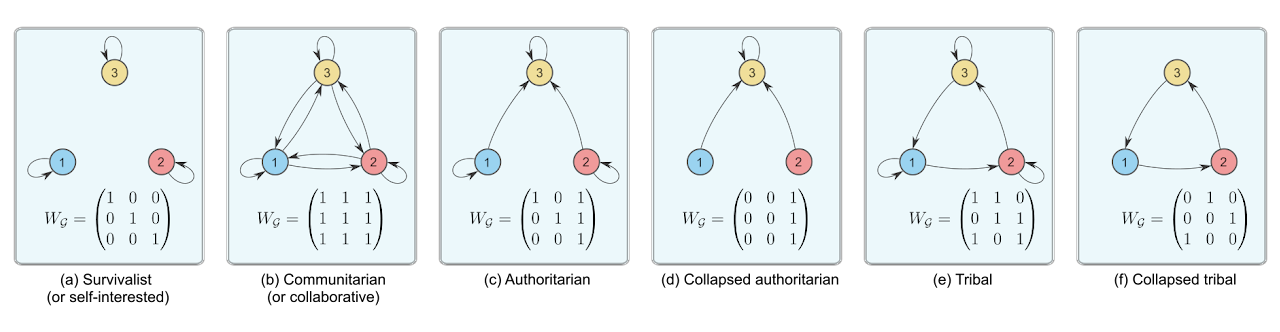

Each of these relational networks is used as an RSRN in the 3-agent MARL system, and the resulting emergent behaviors are examined in Section 5. An agent's actions may be governed by the rewards obtained by more than one agent (including itself). An arrow from an agent to itself indicates that its actions maximize the rewards it obtains for itself. An arrow directed from a first agent to a second indicates that the first agent's actions are governed by the rewards obtained by the second agent, i.e. an agent it 'cares' about.
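One way to encode the arrow convention described above is as a square matrix in which row i marks the agents that agent i cares about. The following is a minimal sketch under that assumption; the helper names `network_from_arrows` and `cared_about_by` are hypothetical, the weights are taken to be binary, and the example arrow list is made up for illustration rather than being one of the networks in the figure.

```python
from typing import Iterable, List, Tuple


def network_from_arrows(
    num_agents: int, arrows: Iterable[Tuple[int, int]]
) -> List[List[float]]:
    """Build a relational network from (source, target) arrows.

    An arrow (i, j) means agent i's actions are governed by agent j's
    reward, so entry [i][j] is set to 1. A self-loop (i, i) means agent i
    maximizes its own reward. Agents are zero-indexed here, so "agent 3"
    in the text corresponds to index 2.
    ASSUMPTION: binary weights; weighted arrows are not modeled.
    """
    network = [[0.0] * num_agents for _ in range(num_agents)]
    for source, target in arrows:
        if not (0 <= source < num_agents and 0 <= target < num_agents):
            raise ValueError(f"arrow ({source}, {target}) is out of range")
        network[source][target] = 1.0
    return network


def cared_about_by(network: List[List[float]], agent: int) -> List[int]:
    """List the agents whose rewards govern the given agent's actions."""
    return [j for j, weight in enumerate(network[agent]) if weight > 0]


# Hypothetical network: every agent has a self-loop, and agents 0 and 1
# additionally care about agent 2.
example_arrows = [(0, 0), (1, 1), (2, 2), (0, 2), (1, 2)]
example_network = network_from_arrows(3, example_arrows)

for agent in range(3):
    print(f"agent {agent} cares about {cared_about_by(example_network, agent)}")
```

Reading the matrix row by row recovers the outgoing arrows of each agent, which is the direction the description uses: the source of an arrow is the agent whose behavior is affected, and the target is the agent whose reward matters.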

Three agents try to cover three landmarks, while agent 3 (yellow) is systematically slowed down by limiting its possible actions.

Please refer to the Publications page for more outcomes on trust in human-robot interaction.